Using surveys to represent the student voice and demonstrate the quality of the experience

As I write, the future of the UK National Student Survey (NSS) is under review.

Led by the Office for Students (OfS), the review and accompanying consultation (phase one of which was published in late March 2021) were launched in direct response to a Government policy paper published in September 2020. The extent to which that paper questioned the role and remit of the NSS, and its link to quality, caught many in the sector unawares.

For many in the sector, the NSS and other sector-wide surveys play a vital role in maintaining standards, rather than supposedly driving them down.

Likewise, instead of purportedly creating an administrative burden, the focus of institutional resources on maximising response rates, and on analysing and acting on the results in full, signifies a genuine desire to represent the student voice fully and to ensure it is at the heart of institutional change.

Student surveys and polling are some of the most powerful tools available for representing students’ views on their experiences, providing a statistically valid complement to other more direct or in-depth approaches to gathering feedback from students.

In our sector in particular, survey findings generate headlines, and, crucially, can influence policy. Robust sector-wide surveys, and the insight they generate, draw attention to issues that require action both at institutional and sector level, sparking debate and discussion and generating further research activity. At the heart of this is the principle of representing the student voice and ensuring it can make a difference.

At Advance HE, we operate three sector-wide student surveys:

- The UK Engagement Survey (UKES), for undergraduates

- The Postgraduate Taught Experience Survey (PTES)

- The Postgraduate Research Experience Survey (PRES)

In addition to this, in partnership with HEPI, we produce the Student Academic Experience Survey (SAES), which is an annual poll of full-time undergraduate opinions on a range of aspects impacting their experience.

Between them, these surveys gather the views of more than 150,000 students annually, with direct engagement from around 120 institutions. This of course requires real commitment from the participating institutions and crucially from the students who spare their time to respond while dealing with multiple pressures.

However, with this commitment comes an undertaking, on behalf of those using the data, to genuinely listen to what is said and to use it to maximise the quality of the academic experience.

The link to quality?

One of the main criticisms made by the Government which prompted the review of the NSS was that it is ‘exerting a downward pressure on quality and standards’ – a claim that generated some robust defence.

It is perhaps unsurprising to many that the phase one report from the Office for Students found no evidence of any lowering of standards. However, the fact that this criticism arose at all – and prompted a major review – indicates that we may need to work harder to emphasise the link between capturing the student voice via surveys and the issue of course quality.

One established method of emphasising this link is through the concept of student engagement, as measured by mechanisms such as the National Survey of Student Engagement (NSSE) in the United States and Advance HE’s UKES, which was developed directly from it.

There are also well-established engagement surveys in China, South Africa, the Republic of Ireland and several other countries. Specifically, student engagement measures how students spend their time in ‘activities and conditions likely to generate high-quality learning’.

By measuring time spent in these activities, the data provide a direct view of student development and the influences on this – an explicit link to quality.

Impact of activities on skills development

Source: UK Engagement Survey 2019

This is illustrated in the chart above, using data from UKES 2019, focusing specifically on time spent in extra-curricular activities.

The chart shows the percentage point difference in the development of each skill between students who participated in each activity and students who did not participate at all. For example, levels of career skills development were 13 percentage points higher among students who spent time in sports and societies than among those who did not.

This example highlights the role extra-curricular activities can play in the development of self-reported skills. Logically, levels of development are greater (with one exception) among students who had spent time in each activity, but the relative differences are crucial here. Sports and societies, as well as volunteering, link very strongly to development of most skills, while working for pay and caring show weaker connections.
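The comparison behind a chart of this kind can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the method used to produce the UKES analysis: the field names (`activities`, `skills_developed`), the function names and the eight toy responses are all assumptions made for the example, and none of the figures come from the survey.

```python
# Illustrative sketch only: hypothetical field names and made-up responses,
# not UKES data and not necessarily the method used in the published analysis.

def development_rate(responses, skill):
    """Percentage (0-100) of the given respondents reporting development of `skill`."""
    if not responses:
        raise ValueError("cannot compute a rate for an empty group")
    reported = sum(1 for r in responses if skill in r["skills_developed"])
    return 100.0 * reported / len(responses)


def percentage_point_gap(responses, activity, skill):
    """Rate among students who did the activity minus the rate among those who did not."""
    participants = [r for r in responses if activity in r["activities"]]
    non_participants = [r for r in responses if activity not in r["activities"]]
    return (development_rate(participants, skill)
            - development_rate(non_participants, skill))


# Eight hypothetical respondents: four took part in sports and societies, four did not.
toy_responses = [
    {"activities": {"sports_societies"}, "skills_developed": {"career"}},
    {"activities": {"sports_societies"}, "skills_developed": {"career"}},
    {"activities": {"sports_societies", "paid_work"}, "skills_developed": {"career"}},
    {"activities": {"sports_societies"}, "skills_developed": set()},
    {"activities": {"paid_work"}, "skills_developed": {"career"}},
    {"activities": set(), "skills_developed": {"career"}},
    {"activities": {"caring"}, "skills_developed": set()},
    {"activities": set(), "skills_developed": set()},
]

gap = percentage_point_gap(toy_responses, "sports_societies", "career")
print(f"Career skills gap: {gap:.1f} percentage points")
```

In this toy data, three of the four participants (75 per cent) and two of the four non-participants (50 per cent) report developing career skills, so the gap is 25 percentage points. A difference expressed this way is a gap between two percentages, not a relative percentage change, which is why the chart's figures are best read as percentage points.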

As well as highlighting the importance of creating opportunities for students in particular areas, this analysis also has implications for how students are supported during the pandemic, as data from UKES 2020 showed that students were having to spend more time working for pay and caring, the areas with weaker links to skills development.

Unlike in some other parts of the world, the concept of student engagement has not yet fully gained traction in the UK among policymakers and, in some cases, within institutions. Arguably, part of this is due to the presence of the NSS as the dominant survey in the undergraduate landscape, although there are many institutions that run both UKES and NSS effectively alongside each other.

However, given its clear link to quality and its potential for global comparison, it will be fascinating to see if larger parts of the sector take the opportunity to promote and implement the concept of measuring student engagement as part of their student voice strategies moving forward.

Representing the postgraduate voice

One area in which the UK higher education sector is arguably a leading global exponent is the systematic measurement of the postgraduate experience, which is achieved through a voluntary focus on enhancement rather than through regulatory means. Through established annual measures like PTES and PRES, in which around 100 institutions take part each year, UK higher education institutions demonstrate a real commitment to capturing the views of postgraduate students and researchers.

This was particularly evident during the 2020 survey season, which coincided with the onset of the pandemic and the first UK lockdown. Under substantial pressure and with resources stretched, a significant cohort of institutions went ahead with their postgraduate surveys, capturing the student experience at a uniquely challenging but vital time. As well as providing their own data to drive enhancement activities, the level of participation helped create a sector-wide dataset which straddled the introduction of lockdown and enabled an early assessment of how it impacted the student experience.

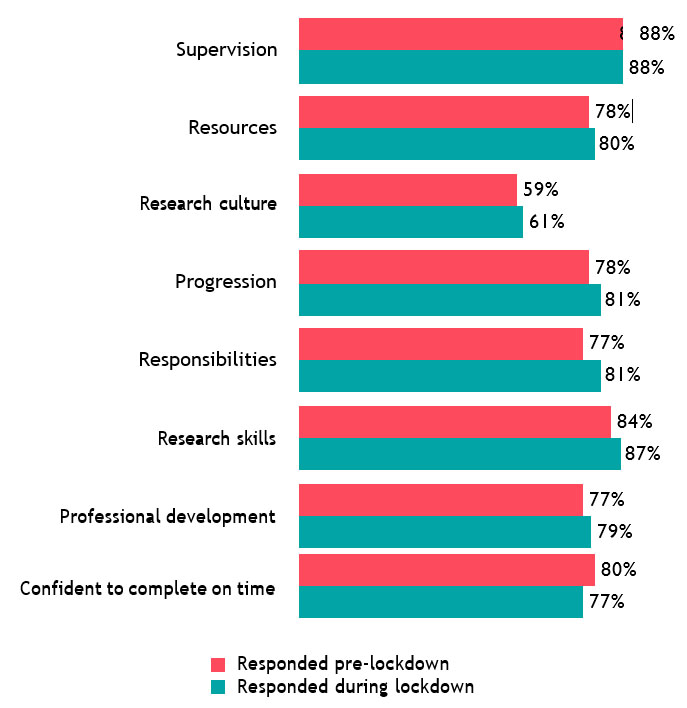

The example in the accompanying chart uses data from the 2020 PRES at sector level and provides evidence of a strong institutional response to the challenges faced, while also highlighting some of the early impacts on postgraduate researchers’ (PGRs) future plans.

Strikingly, most major aspects of the experience were rated higher among PGRs responding during the spring 2020 lockdown.

It might be argued that the PGR experience would be inherently less impacted by a sudden move to online delivery than undergraduate or postgraduate taught delivery, but this would be to underplay the importance of PGR supervisors and other staff in a PGR-facing role maintaining levels of support, resources and development opportunities while dealing with a largely unforeseen crisis.

The fact that all key aspects improved is a strong endorsement of the sector response, illustrated by direct comments from PRES which shine a light on the quality of the support received:

‘Under the specific conditions of Covid-19 I felt a huge effort from my Faculty/Department/supervisors/Administration team and students, to make sure all research degree students were doing well and keep up our motivation.’

‘Due to Covid-19, all facilities closed. My supervisor went out of their way to loan small equipment to me to be able to continue to collect data and work from home.’

‘My supervisor is excellent in terms of academic knowledge and always supports me, such as giving a lot of comments and feedback back to me after we have meetings. Moreover, when I feel confused and not so sure about some parts of my research, they are always here to support even during Covid19.’

What the results also tell us is how the pandemic affected the timing of PGRs’ research, with their overall confidence to complete on time falling during the lockdown period (as shown in the above chart):

‘Covid19 means that my workload has doubled. Therefore I may be delayed in finishing.’

‘Unfortunately my research is on hold due to Covid-19. I am unable to collect data and have to wait till my stakeholder groups return, which may not be until late 2020.’

‘Covid-19 has detracted from my time (full-time NHS role) and the availability of my supervisors.’

‘Because of the Covid-19 lockdown, the first part of this growing season has been lost so an extension may be requested at the end of the project.’

This finding is perhaps logical given the exceptional circumstances. However, while this is clearly a concerning situation for those involved, the gathering of feedback in this way provided a vital opportunity for these concerns to be heard and for action to be taken at an institutional level to provide support and flexibility to help mitigate the impacts wherever possible.

Do students feel their voice is heard?

As well as capturing a range of trackable data on the overall quality of the student experience, the Advance HE / HEPI Student Academic Experience Survey features an annually changing series of questions which reflect key issues of particular interest to the sector and policymakers at the time.

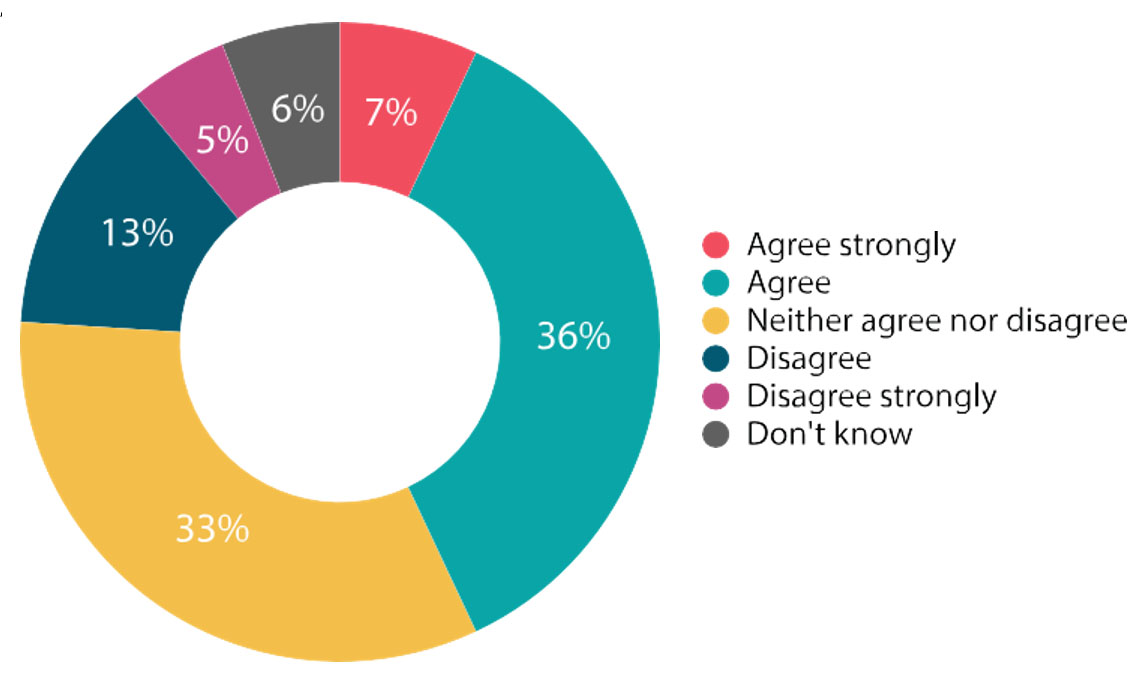

In the light of the pandemic, one of the key questions included in the 2021 survey was whether students felt their voice was heard and recognised by their institution.

Overall, just over four in ten students agreed, or agreed strongly, that this was the case. Only 18 per cent disagreed, but a further one in three gave a neutral response. Although there are no previous data for comparison, the finding that fewer than half of students actively feel their voice is heard is perhaps lower than we might have hoped.

These data imply there is more to be done to capture and represent the student voice across a range of approaches. While students are not necessarily referring only to surveys and polling when considering this question, these approaches can represent some of the most effective ways of doing this when all stakeholders work together.

Rather than creating a burden or driving down standards, surveys and polling can provide a clear measure of the quality of the student experience, as well as representing how institutions have adapted to challenging times across all levels of provision.

The challenge now is to bring together institutions, students and policymakers to recognise this and to focus on using these tools to make the student experience the best it can be.

Jonathan Neves, Head of Business Intelligence and Surveys, Advance HE


Produced by the Higher Education Policy Institute with support from EvaSys, What is the student voice? Thirteen essays on how to listen to students and how to act on what they say (HEPI Report 140), edited by Michael Natzler, is a new collection of essays offering a range of views on what the student voice is, where it resides, and how to listen and respond to it. The collection covers a wide range of topics, from the role of sabbatical officers as governors to the National Union of Students and mature students, and includes contributions from survey experts, sabbatical officers and a vice-chancellor, as well as interviews with the Office for Students’ Student Panel. It also includes a chapter by Nick Hillman, HEPI Director.
